Technology keeps advancing, and it has produced a new form of digital manipulation: deepfakes. Fake photos, videos and speech can be so realistic that it becomes hard to verify their authenticity and distinguish them from reality.

The rise of synthetic media has led us to question the integrity of online content. Manipulated content circulates on social media: for instance, cloned voices are used to spread fake news, and videos recreate the voices and faces of celebrities who have already passed away.

This phenomenon has spread beyond famous personalities to ordinary people, and its realism has led companies to ask whether their customers’ digital identities could be impersonated.

Therefore, it is vital to understand the current impact of deepfakes and how they affect our lives, especially regarding identity verification.

In this article, we explore what deepfakes are, how to guarantee authenticity, their social impact and how having tools to fight malicious use helps ensure a secure and trustworthy future.

A brief history of deepfakes

The term “deepfake” was born on 2 November 2017, when a Reddit user created a forum named deepfake. The community aimed to develop and use deep learning software to swap celebrities’ faces into pornographic videos. One year later, it was deleted. Nevertheless, the spread of deepfakes has been unstoppable since then.

In the beginning, deepfakes were mainly used to entertain users with comical and artistic content. However, their use has since extended to malicious purposes, such as harassing individuals and disseminating false information.

As deepfake technology has advanced and become more accessible, it has become increasingly difficult to differentiate between what is real and what is fake in online content. This has raised concerns about information integrity, manipulation and privacy.

To address these concerns, organisations and researchers are developing technologies and strategies to spot and prevent deepfakes. Before turning to them, however, it is critical to understand what exactly a deepfake is.

What are deepfakes?

In general terms, deepfakes are manipulated videos, voices or photos that use artificial intelligence and deep learning algorithms to change or falsify the appearance or actions of an individual.

Let’s apply the definition to the identity verification industry. Here, a deepfake is a manipulation technique that uses deep learning algorithms to impersonate an individual’s identity. The impersonation can be done through the voice, either by modifying voice fragments so that a person seems to have said things they never said, or by generating speech in another person's vocal register. Deepfakes can also swap people’s faces.

Some examples of deepfakes are:

- Kyiv leader deepfake fooled mayors across Europe.

- A deepfake pushes Bolsonaro ahead of Lula in the presidential poll.

How are they made?

Synthetic audiovisual media are created with deep learning techniques. The best known are Generative Adversarial Networks (GANs). A GAN consists of two neural networks, a generator and a discriminator, that compete with each other. The generator produces fake faces, and the discriminator checks whether a face is real or fake.

The generator aims to study how the discriminator classifies these faces. It does this by analysing changes in skin colour, appearance or lines. The discriminator must identify whether the rendered face is real or fake.

While the discriminator performs these analyses, the generator generates more realistic fakes until it reaches a point where it manages to confuse the discriminator.

Both networks are connected and provide feedback to each other. The process is repeated countless times. In this way, the discriminator's classifications help the generator create better copies, and the generator's output helps the discriminator become better at detection.
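The feedback loop described above can be sketched with a deliberately tiny example. This is not how real face generators are built: it assumes one-dimensional "samples" instead of images, a linear generator and a logistic discriminator, and all names (`discriminator_step`, `generator_step`, `train`) are illustrative. It only shows the alternation between the two networks.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminate(d, x):
    # Probability that sample x is real, according to the discriminator.
    return sigmoid(d["w"] * x + d["c"])


def generate(g, z):
    # Toy generator: turns random noise z into a fake sample.
    return g["a"] * z + g["b"]


def discriminator_step(d, real, fake, lr):
    # Gradient ascent on mean log D(real) + mean log(1 - D(fake)).
    grad_w = grad_c = 0.0
    for x in real:
        p = discriminate(d, x)
        grad_w += (1.0 - p) * x / len(real)
        grad_c += (1.0 - p) / len(real)
    for x in fake:
        p = discriminate(d, x)
        grad_w -= p * x / len(fake)
        grad_c -= p / len(fake)
    return {"w": d["w"] + lr * grad_w, "c": d["c"] + lr * grad_c}


def generator_step(g, d, noise, lr):
    # Gradient ascent on mean log D(G(z)): make fakes score as "real".
    grad_a = grad_b = 0.0
    for z in noise:
        p = discriminate(d, generate(g, z))
        slope = (1.0 - p) * d["w"]  # derivative of log D(x) with respect to x
        grad_a += slope * z / len(noise)
        grad_b += slope / len(noise)
    return {"a": g["a"] + lr * grad_a, "b": g["b"] + lr * grad_b}


def train(steps=200, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    g = {"a": 1.0, "b": 0.0}  # fakes start centred on 0
    d = {"w": 0.0, "c": 0.0}  # discriminator starts undecided (0.5)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(g, z) for z in noise]
        d = discriminator_step(d, real, fake, lr)  # discriminator learns
        g = generator_step(g, d, noise, lr)        # generator reacts
    return g, d
```

Each pass through the loop is one round of the competition: the discriminator first adjusts to separate real from fake samples, and the generator then shifts its output in the direction the discriminator currently rates as more real.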

Then, how can a deepfake video be generated?

Entertainment apps such as Reface or ZAO (in China) can be used to create a deepfake video. Both applications alter facial features through filters.

However, creating better-quality video deepfakes requires a computer with high processing power and face-swapping programs such as Faceswap and DeepFaceLab.

To create a deepfake, you need a set of source and target files. The program takes both, extracts the frames and creates a target mask from the source file.

Both faces are then fed into the network. The network learns the features of the source face and the features of the target face, and as the iterations go by, it manages to define those features.

It is a painstaking process that requires a large amount of data with a person's facial expressions and reference images. It is also a complex process that, to date, does not generate realistic digital masks.

Consequences of deepfakes for society

As technology advances, deepfakes gain popularity and raise concerns about their consequences. Some of these consequences are:

- Disinformation and propaganda: deepfakes, along with shallowfakes, spread false information and influence political campaigns, which can significantly affect opinion formation and political decisions.

- Reputational damage: they can make someone appear to say or do something they did not, severely damaging their reputation.

- Privacy invasion: some deepfakes use original photos or videos of people, which can invade their privacy.

- Identity fraud: many companies are worried that deepfakes can falsify a customer’s identity in online verification processes.

What is the difference between a deepfake and a shallowfake? The main difference is that a shallowfake is created with simple video editing software rather than machine learning technology.

Deepfakes and identity theft

The growth of deepfakes and the unstoppable development of AI have led companies to ask whether this phenomenon could put their digital onboarding processes at risk.

In KYC processes, users are asked to show their ID and take a selfie. These processes also include liveness detection, where the user is usually asked to move. The movement differs from process to process; for example, the user may be asked to move their head from side to side, blink, smile, or show facial expressions associated with emotions such as happiness or sadness. Because the requested movement is random in each process, generating a matching video in advance and replaying it during online registration becomes much more complicated.
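A minimal sketch of the random-movement idea follows. The gesture names, the time limit and the `observed` list (which stands in for the output of a face tracker) are assumptions made for illustration, not a description of any specific product.

```python
import secrets

# Hypothetical set of gestures a liveness check might request.
GESTURES = ["turn_head_left", "turn_head_right", "blink", "smile"]


def new_challenge(length=3):
    # secrets.choice draws from the operating system's random source,
    # so an attacker cannot predict the sequence and pre-render a video.
    return [secrets.choice(GESTURES) for _ in range(length)]


def passes_challenge(challenge, observed, elapsed_seconds, time_limit=10.0):
    # `observed` is the ordered list of gestures recognised in the live
    # video. The order must match and the user must respond in time.
    return elapsed_seconds <= time_limit and observed == challenge
```

With four gestures and three steps there are 64 possible sequences, so a pre-recorded clip is unlikely to match the one requested in a given session.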

Biometric spoofing through deepfakes is not viable when anti-spoofing techniques are in place to detect presentation attacks and to determine whether the biometric data corresponds to a live person or has been manipulated.

Furthermore, companies usually add security layers beyond anti-spoofing techniques for biometric data. For example, they can implement remote identification processes via videoconferencing. Real-time verification of the process by an agent adds an extra layer of security and leaves less room for deepfakes.

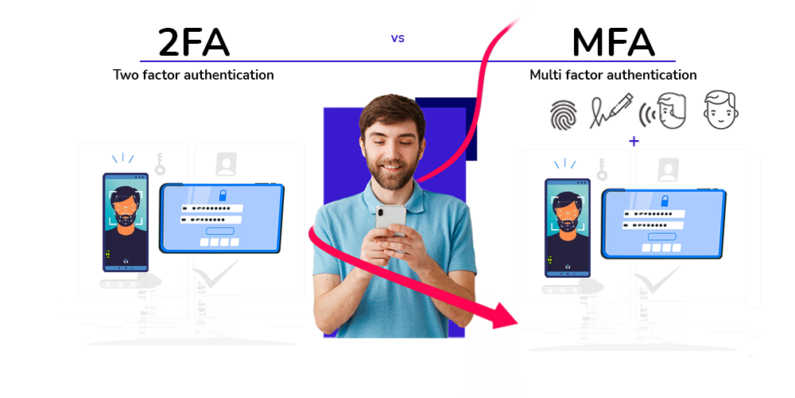

Another option is to use multi-factor authentication (MFA), which combines several authentication factors to ensure the reliability of the user’s identity. The most widespread combination pairs inherence factors (facial recognition or fingerprint scanning) with possession factors (a one-time password, or OTP, sent to the user's device).
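To illustrate how the two factors combine, here is a short sketch. The one-time password follows the HOTP algorithm from RFC 4226; the `face_match` flag is an assumption that stands in for the result of a separate biometric check, and `authenticate` is an illustrative name.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    # HMAC-based one-time password (RFC 4226).
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def authenticate(face_match: bool, submitted_otp: str,
                 secret: bytes, counter: int) -> bool:
    # Both factors must succeed. compare_digest avoids timing leaks
    # when comparing the submitted code with the expected one.
    expected = hotp(secret, counter).encode()
    otp_ok = hmac.compare_digest(submitted_otp.encode(), expected)
    return face_match and otp_ok
```

With the RFC 4226 test secret `12345678901234567890` and counter 0, `hotp` returns `755224`. A deepfake that fools the face check still fails authentication unless the attacker also holds the device that produces the code.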

Technologies to detect deepfakes and guarantee authenticity

Advanced technologies for detecting deepfakes include image, video, voice, speech, language, and error-level analysis techniques. Each technique aims to discover specific patterns. For example:

- Facial motion analysis: detects unnatural patterns in facial animation that indicate manipulation.

- Audio analysis: detects discrepancies in lip sync, audio quality, and frequency.

- Texture analysis: detects suspicious patterns in skin and hair texture.

- Metadata analysis: verifies the authenticity of video metadata, such as the date and time of creation and geographic location. The metadata of a manipulated video normally does not match that of a genuine recording.

- Error level analysis: analyses several frequencies of the video or performs a spatiotemporal analysis of the video to detect, for example, face point mismatches.
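As one example of these ideas, the lip-sync check from the audio analysis item can be sketched as a correlation between how open the mouth is in each frame and how loud the audio is at the same moment. The sketch assumes both series have already been extracted, have the same length and are aligned per frame; the function names, the lag window and the 0.5 threshold are illustrative choices, not values from a real detector.

```python
import math


def pearson(xs, ys):
    # Pearson correlation of two equally long sequences.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat signal carries no synchronisation evidence
    return cov / math.sqrt(var_x * var_y)


def lip_sync_score(mouth_opening, audio_energy, max_lag=2):
    # Best correlation over small frame offsets, to tolerate encoding delay.
    best = -1.0
    n = len(audio_energy)
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_opening[lag:], audio_energy[:n - lag]
        else:
            a, b = mouth_opening[:lag], audio_energy[-lag:]
        if len(a) >= 3:
            best = max(best, pearson(a, b))
    return best


def looks_out_of_sync(mouth_opening, audio_energy, threshold=0.5):
    # Illustrative threshold: low correlation suggests dubbed or generated audio.
    return lip_sync_score(mouth_opening, audio_energy) < threshold
```

In genuine footage the mouth tends to open when the speaker is loud, so the score is high; a cloned voice laid over unrelated footage tends to score low. A production system would combine this signal with the other analyses in the list.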

How does Mobbeel detect deepfakes?

Mobbeel’s R&D&I department specialises in detecting identity theft attacks. It uses different techniques to identify artefacts and 2D presentation attacks, static and dynamic, using photos, videos, masks, or paper. It also has tools to detect 3D attacks.

In addition to the techniques for detecting presentation attacks when registering new customers, Mobbeel has the SINCERE project. Through this project, Mobbeel has developed an advanced deepfake fraud detection system based on metadata and error-level analysis.

Furthermore, it uses deep learning techniques such as Vision Transformers, with results of over 80%.

Recommendations for distinguishing a deepfake

Being equipped with state-of-the-art deepfake detection technology to prevent fraud is important, but it is also highly recommended to pay attention to details, such as:

- Blurred face.

- Lack of light in the eyes.

- Mismatches in facial expressions.

- Faulty perspective.

- Facial occlusion.

- Absent or excessive blinking.
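The last item on the list lends itself to a simple automated check. The sketch below assumes a per-frame eye-openness value between 0 (closed) and 1 (open) is already available from a face tracker; the closing threshold and the acceptable range of blinks per minute are illustrative assumptions, not calibrated values.

```python
def count_blinks(eye_openness, closed_below=0.2):
    # A blink is counted each time the eye goes from open to closed.
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(eye_openness, fps,
                             min_per_minute=5.0, max_per_minute=40.0):
    # Flags videos where the subject blinks far too rarely or too often.
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        raise ValueError("no frames to analyse")
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute
```

A short clip gives an unreliable rate, so a check like this is better suited to longer videos and should be treated as one hint among several.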

The importance of identity verification in a world of deepfakes

Identity verification is more important than ever in a world of deepfakes. Deepfakes can be used to impersonate a person’s identity, posing a danger to businesses.

Digital identity verification can prevent these risks by confirming a user’s identity before allowing them to purchase services or products. It can include ID scanning and biometric verification through facial recognition with liveness detection.

Implementing rigorous identity verification processes such as Mobbeel's can help prevent deepfakes from being used to impersonate people online.

As a bonus, you can watch the talk “Deepfakes: with great power comes great responsibility” given by our colleague Ángela Barriga, R&D researcher at Mobbeel, at the Extremadura Digital Day.

Feel free to contact us if you are concerned about the risks that deepfakes pose to your company.
