El Rincón del Vago, the Spanish website many of us turned to as teenagers to copy schoolwork, was the scene of a glorious era of shortcuts, when avoiding boring tasks seemed reason enough to skip the effort. It was a clear example of how copying content saved us time, often at the cost of the learning itself. In that context the intention was not exactly sinful, since there was no bad faith, just laziness. Still, this practice of ‘copying and pasting’ meant replicating content without stopping to consider the consequences of our actions.

Today, replication has evolved to a much more complex level. Tools such as AI anime art generators allow anyone, with simple prompts, to produce images that mimic the styles of renowned studios such as Studio Ghibli. While the results can be striking, these tools are being used on a massive scale with little reflection on the ethical and legal implications, especially when works are replicated without any kind of permission.

But artificial intelligence is not always used for innocent purposes. Increasingly, GenAI tools make it possible to generate fake identity documents detailed enough to get past traditional detection systems. What was once a practice limited to experts is now available to anyone with a basic knowledge of how to use these technologies.

While many users are turning to AI in creative ways, the ability to create identity documents that look authentic is opening the door to a new type of fraud. That raises the question: can we really detect these documents with the help of algorithms and fraud detection tools?

Let’s take a look.

Creating fake passports with ChatGPT

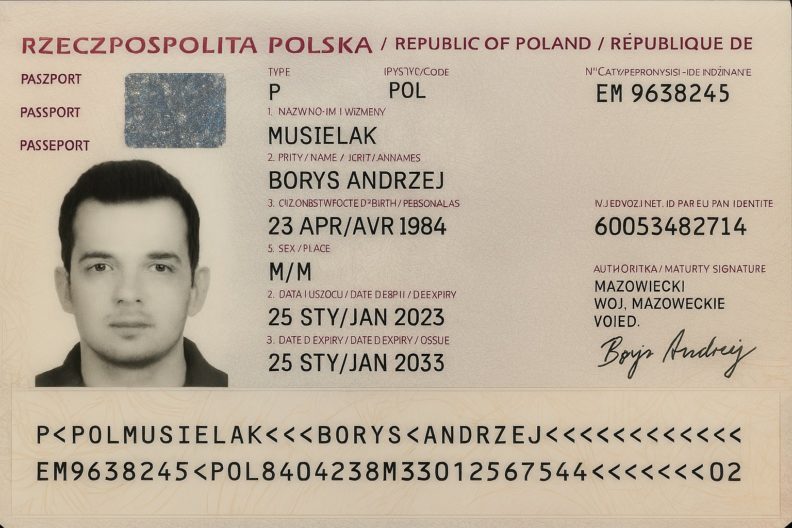

Just a few weeks ago, a Polish researcher put the spotlight on the potential malicious use of generative AI. In an experiment, he reported using ChatGPT (GPT-4o) to generate a fake passport in just five minutes.

According to him, the document passed the identity verification checks of some platforms, which I will not name. The story, of course, went viral. Many media outlets picked it up as a wake-up call: if AI can create such convincing fake documents, what margin of error is left for current identity verification solutions?

The test quickly sparked debate on social media and in professional circles linked to digital identity. But beyond the media impact, it is worth clarifying what this experiment in generative AI identity theft actually showed. The researcher did not test his passport against every existing system, nor did he prove that these documents can get past biometric validation backed by well-trained algorithms. What he did was use an extreme example to show that generative tools can be used, with enough know-how, to create documents that fool certain processes.

And while his experiment does not confirm that we are facing a widespread threat, it does put a very real problem on the table: the need to anticipate a type of identity fraud fuelled by increasingly accessible generative AI. This kind of fraud no longer requires large networks of forgers, just a good connection, some well-written instructions and a generative AI model that is available to everyone.

What is generative AI document identity theft

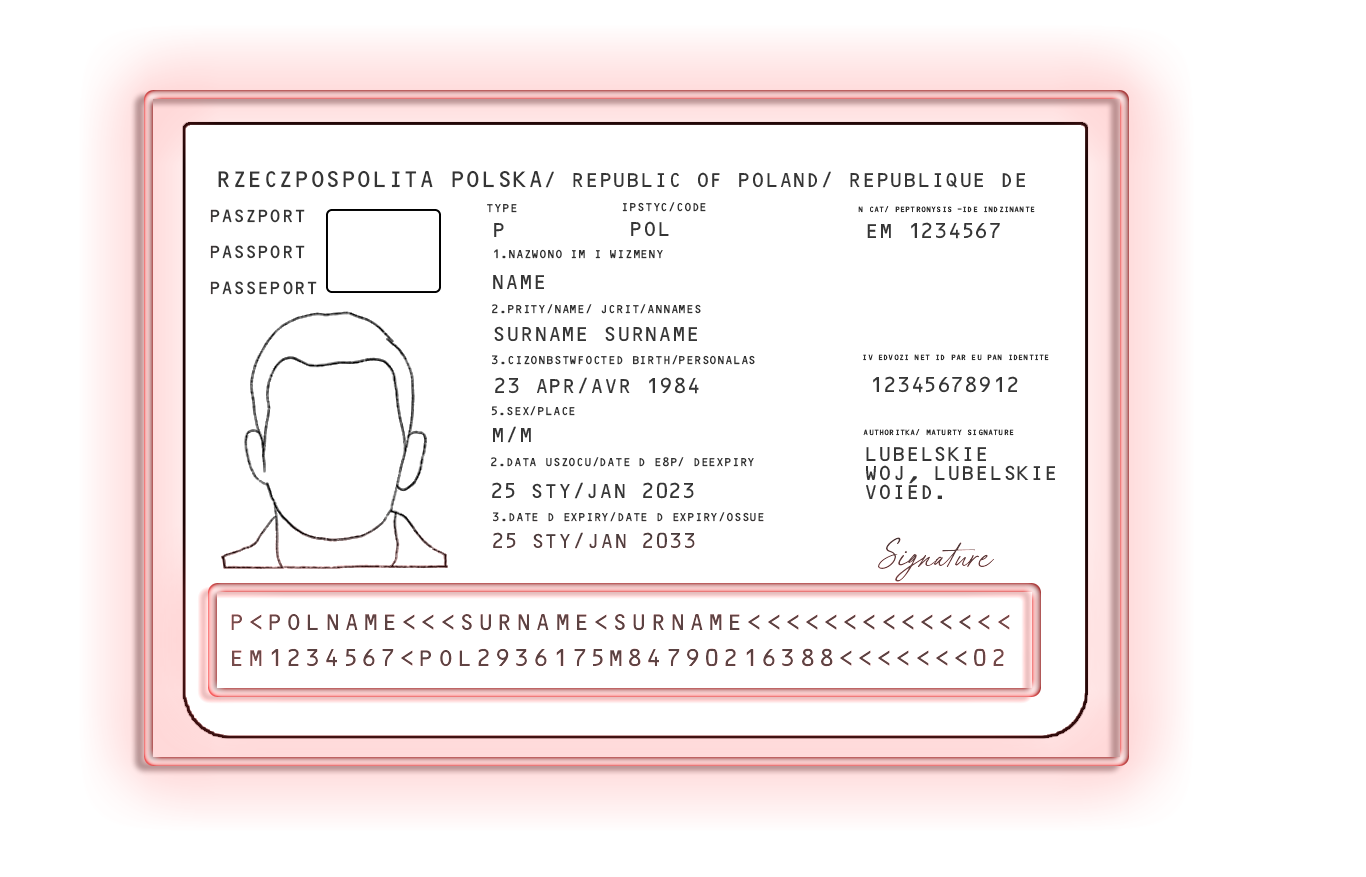

Generative AI document identity fraud is the creation of fake official documents by using artificial intelligence models capable of generating images, texts and visual compositions that mimic real formats.

Forging an identity is not what it used to be. Generative artificial intelligence has introduced a new form of document impersonation. Models that combine text and images can imitate some of a document's elements. However, the technology has not yet reached the level of perfection that many fear. Generating a document that ‘looks’ real may be relatively straightforward, but keeping all its elements consistent, especially the MRZ (machine-readable zone) and advanced security features, remains a complex task for these models.

The concern is not so much the final quality of the document, which still shows inconsistencies, as the speed with which an acceptable one can be generated. In just a few minutes, it is possible to produce a forgery that could go undetected if it is not checked with the appropriate tools.

Still, this threat does not leave us defenceless. As synthetic fraud becomes increasingly automated, defence mechanisms are evolving too. Tools based on neural networks and machine learning algorithms can analyse not only the appearance of a document but also patterns and signals invisible to the human eye. Detection is no longer limited to visually comparing an image against a database; it now means reading between the lines of what artificial generation cannot completely hide.

And this is precisely where technology like the one we have been developing at Mobbeel since 2009 comes into play.

How to detect a fake ID or passport with Mobbeel’s fraud detection mechanisms

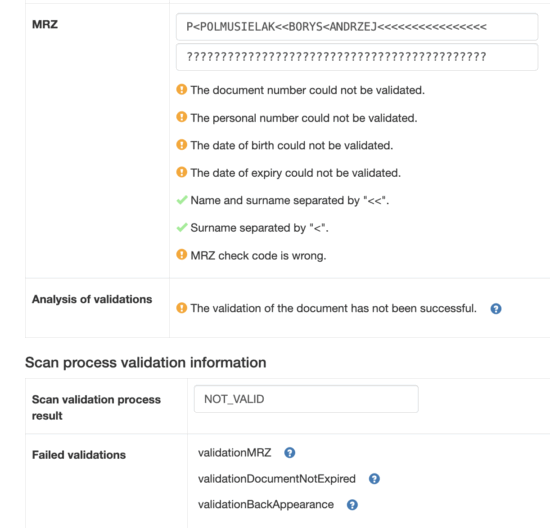

We tested the passport at the centre of the controversy to see whether it could bypass the controls of our digital onboarding solution, specifically its ID document capture and validation step, MobbScan.

Multiple inconsistencies were detected from the outset:

- The format of the document was unknown.

- The date of birth could not be recognised or validated.

- The nationality was shown as invalid.

- The MRZ had multiple errors:

  - The document number and identity could not be validated.

  - The date of birth and expiry date could not be validated.

  - The check digit (control code) computed from the MRZ data did not match.

All of this led to one conclusion: the process returned NOT_VALID.

This example shows how a document that might appear genuine at first glance is automatically taken apart by our system once several faults are identified.
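Several of the MRZ failures above come down to arithmetic defined in the public ICAO 9303 standard. Key MRZ fields are protected by check digits: each character is mapped to a value (digits as themselves, A to Z as 10 to 35, the filler `<` as 0), multiplied by the repeating weights 7, 3, 1, and summed modulo 10. The following is a minimal sketch, assuming a clean OCR reading of line 2 of a passport (TD3) MRZ, that recomputes those check digits and checks that the dates parse as YYMMDD. It illustrates the standard only; it is not MobbScan's implementation, and all function names are hypothetical.

```python
from datetime import datetime

DIGITS = "0123456789"
WEIGHTS = (7, 3, 1)  # ICAO 9303 weights, repeated across the field


def char_value(c: str) -> int:
    """Map an MRZ character to its numeric value (0-9, A-Z = 10-35, '<' = 0)."""
    if c in DIGITS:
        return int(c)
    if "A" <= c <= "Z":
        return ord(c) - ord("A") + 10
    if c == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {c!r}")


def check_digit(field: str) -> int:
    """Weighted sum of character values (weights 7, 3, 1), modulo 10."""
    return sum(char_value(c) * WEIGHTS[i % 3] for i, c in enumerate(field)) % 10


def is_valid_yymmdd(value: str) -> bool:
    """True if value is six digits forming a real calendar date."""
    if len(value) != 6 or any(c not in DIGITS for c in value):
        return False
    try:
        datetime.strptime(value, "%y%m%d")
    except ValueError:
        return False
    return True


def validate_td3_line2(line: str) -> list[str]:
    """Return the problems found in line 2 of a passport (TD3) MRZ; empty means consistent."""
    if len(line) != 44:
        return [f"expected 44 characters, got {len(line)}"]
    bad = [c for c in line if not (c in DIGITS or "A" <= c <= "Z" or c == "<")]
    if bad:
        return [f"invalid MRZ character: {bad[0]!r}"]

    errors = []
    # (field name, field contents, index of the check digit protecting it)
    fields = [
        ("document number", line[0:9], 9),
        ("date of birth", line[13:19], 19),
        ("expiry date", line[21:27], 27),
        ("optional data", line[28:42], 42),
    ]
    for name, value, pos in fields:
        # ICAO 9303 lets an unused optional-data field carry '<' as its check digit.
        if name == "optional data" and line[pos] == "<" and set(value) == {"<"}:
            continue
        if str(check_digit(value)) != line[pos]:
            errors.append(f"{name}: check digit mismatch")

    for name, value in (("date of birth", line[13:19]), ("expiry date", line[21:27])):
        if not is_valid_yymmdd(value):
            errors.append(f"{name}: not a valid YYMMDD date")

    # The composite check digit covers positions 1-10, 14-20 and 22-43 (1-based).
    composite = line[0:10] + line[13:20] + line[21:43]
    if str(check_digit(composite)) != line[43]:
        errors.append("composite check digit mismatch")
    return errors


specimen = "L898902C36UTO7408122F1204159ZE184226B<<<<<10"  # ICAO 9303 specimen passport
print(validate_td3_line2(specimen))  # []
```

The test line is the specimen passport published in ICAO Doc 9303 (issuing state `UTO`). Changing a single digit of its birth date breaks both the date-of-birth and the composite check digits, which is the kind of cross-field consistency a generated image struggles to get right.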

Document verification is only the first step of the process

Detecting that an ID is invalid is only one of the steps a verification process can include. When the document is captured, our technology analyses the image and runs a battery of automatic appearance and security checks to determine whether the document complies with international standards and whether it has been tampered with or forged.

Using computer vision, the system inspects the physical appearance of the document for signs of tampering. It applies a series of specific checks which, taken together, produce an overall verdict on the document's validity.

Among the elements that are checked are:

- The type of document and its format.

- The MRZ typeface matches the official one specified in the ICAO 9303 standard (OCR-B).

- The region where the facial image is located is not altered (e.g. with another image superimposed).

- Edges and corners are present, without cuts or deformations.

- All official emblems and badges are visible.

This analysis is done in milliseconds. When something does not match, as in the case of the analysed passport, the system detects it and marks it as invalid.
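As a rough illustration of how individual checks can be combined into one verdict, the sketch below runs a list of independent checks and returns NOT_VALID if any of them fails, reporting which ones. The check functions, the input fields (`template`, `mrz_font`, `photo_tampering_score`) and the 0.5 cut-off are hypothetical stand-ins for the computer-vision models that would produce those signals, and a strict all-must-pass rule is only one possible policy.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


# A check takes the data extracted from the captured document and returns a result.
Check = Callable[[dict], CheckResult]


def check_known_format(doc: dict) -> CheckResult:
    # Hypothetical: 'template' is filled in by an earlier step when the layout is recognised.
    ok = doc.get("template") is not None
    return CheckResult("document format", ok, "" if ok else "unknown document format")


def check_mrz_typeface(doc: dict) -> CheckResult:
    # Hypothetical: 'mrz_font' comes from a font classifier run on the MRZ region.
    ok = doc.get("mrz_font") == "OCR-B"
    return CheckResult("MRZ typeface", ok, "" if ok else "MRZ is not set in OCR-B")


def check_photo_region(doc: dict) -> CheckResult:
    # Hypothetical score in [0, 1]; a missing score is treated as suspicious (1.0).
    ok = doc.get("photo_tampering_score", 1.0) < 0.5
    return CheckResult("photo region", ok, "" if ok else "possible photo substitution")


def evaluate_document(doc: dict, checks: list[Check]) -> tuple[str, list[CheckResult]]:
    """Run every check; the document is VALID only if all of them pass."""
    results = [check(doc) for check in checks]
    failures = [r for r in results if not r.passed]
    return ("VALID" if not failures else "NOT_VALID"), failures


CHECKS: list[Check] = [check_known_format, check_mrz_typeface, check_photo_region]

status, failures = evaluate_document(
    {"template": None, "mrz_font": "OCR-B", "photo_tampering_score": 0.1}, CHECKS
)
print(status, [f.detail for f in failures])  # NOT_VALID ['unknown document format']
```

Keeping each check as a separate function that returns a named result makes it easy to add new checks and to explain a rejection, since the failing checks are returned alongside the verdict.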

Biometric comparison

Once the document has been validated, a second step based on facial biometrics can be added. This step verifies that the person presenting the document really is who they claim to be.

To do this, the photo on the document is compared with a real-time capture or selfie taken by the user. This verification is performed by a biometric engine based on deep learning technologies, trained to tolerate differences such as the passage of time, changes in physical appearance or the varying quality of the images.
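The article does not describe the biometric engine's internals, but a common pattern for this kind of comparison is to turn each face image into a fixed-length embedding with a deep network and then compare the two vectors. The sketch below assumes both embeddings already exist and uses cosine similarity with a hypothetical threshold of 0.6; in practice the threshold is tuned per model to balance false accepts against false rejects.

```python
import math

MATCH_THRESHOLD = 0.6  # hypothetical; real thresholds are tuned per model and use case


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def faces_match(document_embedding: list[float], selfie_embedding: list[float],
                threshold: float = MATCH_THRESHOLD) -> bool:
    """Accept the pair as the same person if their similarity reaches the threshold."""
    return cosine_similarity(document_embedding, selfie_embedding) >= threshold


# Toy 3-dimensional vectors; real face embeddings usually have hundreds of dimensions.
print(faces_match([0.9, 0.1, 0.4], [0.8, 0.2, 0.5]))  # True
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common choice for embeddings whose magnitude carries little identity information.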

Furthermore, to protect the process against further spoofing attempts, mechanisms are added to detect presentation attacks, where an artefact is shown to the camera, and injection attacks, where manipulated images or video are fed directly into the capture pipeline. These measures make it possible to identify spoofing attempts using photographs, screenshots, masks or even deepfakes. Detection relies on passive techniques that require no additional interaction from the user.

More guarantees? We have them

In scenarios that require higher levels of security, a third step can be added that consists of a complete recording of the process and a subsequent review by an agent.

The challenge is not fraud, it is identifying it in time

The threat of document fraud, as we know, is not new. What has changed is the way it manifests itself: faster, on a larger scale, more automated.

Generative artificial intelligence has sped things up and lowered the barriers to entry. However, the real risk lies not only in this shift but also in not having the right mechanisms in place to detect it in time.

At Mobbeel, we have been working for years with a clear idea: it is not about guessing whether something is fake or not. It is about analysing each document beyond its appearance and anticipating even types of fraud that have not yet appeared.

Our systems analyse multiple signals in parallel. We use neural networks specifically trained to distinguish legitimate documents from synthetically generated ones, no matter how well constructed. Inconsistencies in OCR fields, the logic of the MRZ, and even the match between the document photo and the user’s selfie are all analysed in a matter of seconds.

It is not just a matter of knowing whether a document is fake, but of knowing it before it causes real damage. Fraud does not need to be perfect to work; it just needs to get through once.

And that is where technology makes the difference. Verification is no longer just another stage in the process but an active barrier, and it only works if it is fuelled by knowledge, experience and well-trained technology.

The key is not to detect fraud when it has already happened. The key is not to give it a chance to creep in.

Feel free to contact us if you are looking for an identity verification solution capable of tackling identity theft with generative AI.

I am a curious mind with knowledge of law, marketing and business. A word alchemist, deeply in love with neuromarketing and copywriting, who helps Mobbeel keep growing.
