An autonomous neural network applies for a commercial loan. A corporate avatar signs a contract on behalf of a company. Your digital twin interacts with partners on a video call, standing in for you. A trading algorithm negotiates derivatives in global markets.

These scenarios aren’t science fiction. They are already emerging, and they highlight a pressing issue: the glaring void in digital identity verification for non-human agents.

A few weeks ago, at MWC, I interacted with Amira, a humanoid robot that became one of the event’s standout attractions. And just this week, I had a conversation with Maya, an AI from Sesame, which far surpassed my experiments with other chatbots like ChatGPT. Without a doubt, Maya’s ability to convey emotion, play with pauses, and grasp the nuances of real-time conversation gives it an uncanny—almost unsettling—sense of humanity.

Amira isn’t the only example. Elon Musk plans to make Optimus, Tesla’s humanoid helper robot, an affordable household fixture in the near future.

This not-so-distant future was once the stuff of films like Her, where Joaquin Phoenix’s character falls in love with his OS’s AI. Watching Amira or talking to Maya, it’s impossible not to think: That cinematic reality is closer than ever.

So, how will we distinguish AI from humans? Will AIs eventually have legal personhood? A recognised legal identity?

Legal identity for AI agents and robots

There is an ongoing debate about whether artificial intelligences and robots should be granted legal identity in the future. This discussion focuses on philosophical, ethical, legal and technological questions, with divided positions regarding the rights and responsibilities of machines.

The European Parliament has proposed creating a legal status for robots, specifically using the category of “electronic personality” or “e-personality” for robots with autonomous and self-learning capabilities. This proposal has generated heated debate at European and international institutional levels.

According to Silvia Salardi in her work “Robotics and Artificial Intelligence: Challenges for Law”, an interesting debate has emerged around the e-personality concept, sparked by an open letter to the European Commission signed by over 150 experts, researchers and specialists in AI and robotics. The letter explicitly references the European Parliament’s request to create a legal status for robots, particularly using the revised legal category of electronic personality.

The signatories maintain that granting legal personhood to autonomous machines cannot be based on either the model of natural persons or legal entities.

In the first case (natural persons), robots would hold human rights, which would contravene the contents of the Charter of Fundamental Rights and the European Convention on Human Rights.

In the second case (legal entities), personhood would presuppose that there are individuals behind the robot’s actions who represent and guide it, which would not hold for robots with autonomous and self-learning capabilities.

This remains a controversial topic with much debate still ahead. The main argument in favor of creating a legal identity for AIs is the following:

If AIs develop autonomy, creativity and advanced decision-making, they could be considered “electronic persons” with rights and obligations. This could facilitate assigning legal responsibility for errors or damages caused by autonomous AIs.

However, many argue that AIs and robots are ultimately tools and should not be considered legal agents. Furthermore, granting rights to an AI or robot could diminish responsibility for their actions by the humans who create and control them.

This debate carries ethical and legal implications that invite us to reflect on the very identity of these technologies.

AI opens the door to identity fraud

The absence of a KYC (Know Your Customer) and AML (Anti-Money Laundering) framework for artificial intelligence creates vulnerabilities for fraud, money laundering, and identity theft.

AI has reached such a level of sophistication that it can now generate highly accurate digital replicas of any individual using deepfake technology.

The vulnerability of personal data—whether shared voluntarily or unintentionally—is becoming increasingly evident in alarming scenarios. There have already been cases where cybercriminals have impersonated individuals using AI-generated voice simulations (voice deepfakes).

But it doesn’t stop at voice. AI can now produce hyper-realistic video simulations, complete with facial expressions and mannerisms that are very hard to tell apart from the real person, and in some cases capable of bypassing banking verification systems. These forgeries can evade traditional KYC protocols, posing a significant risk to both personal and financial security.

How Do You Verify an AI Agent Without a Face, Fingerprint, or Passport?

First, it’s important to note that identity verification methods for AI agents would need to be specifically designed for them, as current systems are built to distinguish humans from machines—not one machine from another.

Moreover, the very concept of identity tied to a non-human agent—such as an AI chatbot or robot—remains controversial. The question is no longer whether we need solutions, but how to design them.

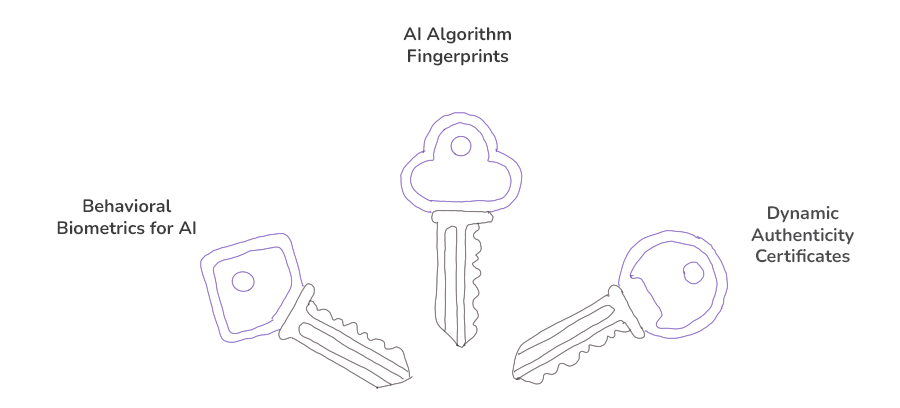

Three Pillars for AI Identity Verification

Authenticating an artificial intelligence isn’t the same as verifying a human user. It’s a complex challenge requiring a multi-layered approach that validates how the AI was built, how it was trained, and whether its behavior aligns with expectations. Potential strategies include:

1. AI Algorithm Fingerprints

Each AI could have a unique “digital DNA” based on:

- Its neural network architecture (layers, nodes, activation functions)

- Historical decision-making patterns (recorded on an immutable blockchain)

Example: A loan-approval AI could be identified by its risk-assessment patterns.
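
As a rough illustration of the fingerprint idea, the sketch below hashes a declared architecture and folds a decision log into a hash chain. It is only a minimal sketch under stated assumptions: the model description, the decision records, and the function names are hypothetical, and a local SHA-256 hash chain stands in for the blockchain mentioned above.

```python
import hashlib
import json


def canonical_bytes(data: dict) -> bytes:
    """Serialize a dict deterministically so equal content hashes equally."""
    return json.dumps(data, sort_keys=True, separators=(",", ":")).encode("utf-8")


def architecture_fingerprint(architecture: dict) -> str:
    """SHA-256 digest of the declared architecture (layers, nodes, activations)."""
    return hashlib.sha256(canonical_bytes(architecture)).hexdigest()


def extend_decision_chain(previous_head: str, decision: dict) -> str:
    """Fold one decision record into the hash chain and return the new head."""
    payload = previous_head.encode("utf-8") + canonical_bytes(decision)
    return hashlib.sha256(payload).hexdigest()


# Hypothetical description of a loan-approval model (illustrative values only).
loan_model = {
    "name": "loan-approval-demo",
    "layers": [
        {"type": "dense", "nodes": 64, "activation": "relu"},
        {"type": "dense", "nodes": 1, "activation": "sigmoid"},
    ],
}
fingerprint = architecture_fingerprint(loan_model)

# Hypothetical decision log; the chain starts from the architecture fingerprint,
# so the history is bound to one specific declared architecture.
decisions = [
    {"application": "A-001", "risk_score": 0.18, "approved": True},
    {"application": "A-002", "risk_score": 0.71, "approved": False},
]
chain_head = fingerprint
for decision in decisions:
    chain_head = extend_decision_chain(chain_head, decision)

print("architecture fingerprint:", fingerprint)
print("decision chain head:     ", chain_head)
```

Changing a single layer or rewriting a past decision produces a different digest, which is the property a fingerprint registry would rely on. Anchoring the chain head on a public ledger would be a separate step not shown here.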

2. Behavioral Biometrics for AI

Real-time monitoring of metrics like:

- Processing speed in critical tasks (does it match its declared design?)

- Statistical deviations in outputs (is it learning beyond authorized limits?)

Example: Detecting an “investment bot” that claims to be conservative but operates as high-risk—its behavioral footprint would expose inconsistencies.
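
The investment-bot example can be sketched as a simple statistical check: compare a recent window of an observed metric against the baseline the agent declared. This is a minimal sketch, assuming the metric is a per-trade risk score and that a z-score with a threshold of 3 is an acceptable test. Both choices, along with every name and number below, are illustrative assumptions rather than an established method.

```python
from statistics import mean, stdev


def drift_z_score(baseline: list[float], recent: list[float]) -> float:
    """How many standard errors the recent mean sits from the baseline mean."""
    baseline_sd = stdev(baseline)
    if baseline_sd == 0:
        raise ValueError("baseline has no variation; z-score is undefined")
    standard_error = baseline_sd / len(recent) ** 0.5
    return (mean(recent) - mean(baseline)) / standard_error


def matches_declared_profile(
    baseline: list[float], recent: list[float], threshold: float = 3.0
) -> bool:
    """True when recent behavior stays within the threshold of the baseline."""
    return abs(drift_z_score(baseline, recent)) <= threshold


# Hypothetical risk scores recorded when the bot was certified as conservative.
declared_baseline = [0.10, 0.12, 0.11, 0.09, 0.10, 0.13, 0.11, 0.10]

# Two hypothetical observation windows taken later in production.
conservative_window = [0.11, 0.10, 0.12, 0.09]
aggressive_window = [0.35, 0.40, 0.38, 0.42]

print(matches_declared_profile(declared_baseline, conservative_window))
print(matches_declared_profile(declared_baseline, aggressive_window))
```

A real monitor would likely track several metrics at once and use a test suited to their distributions. The point here is only that a declared profile gives a reference against which behavior can be measured.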

3. Dynamic Authenticity Certificates

Digital certificates that don’t just identify an AI but track its evolution in real time, combining:

- NFTs (linked to its source code and initial parameters)

- Smart contracts (auto-updating for new capabilities or behavioral shifts)

- Timestamping (blockchain-anchored audit trails)

When an AI is created, an NFT could be minted to reflect its ethical boundaries and purpose. Unlike static certificates, this would update automatically via smart contracts—logging every major change, from new learning capabilities to unexpected deviations.
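
To make the idea of a certificate that logs its own evolution more concrete, here is a minimal sketch in which each change is appended to a hash-linked audit trail. It deliberately swaps in a plain in-memory Python object for the NFT and smart-contract layer, so it shows only the tamper-evident logging, not on-chain minting. The class, field names, and sample values are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def digest(payload: dict) -> str:
    """SHA-256 of a deterministic JSON encoding of the payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class AuthenticityCertificate:
    agent_id: str
    source_code_hash: str  # stands in for the link to source code and parameters
    purpose: str
    entries: list = field(default_factory=list)

    def record_change(self, description: str, timestamp: str) -> dict:
        """Append a change, linking it to the previous entry by hash."""
        previous_hash = (
            self.entries[-1]["entry_hash"] if self.entries else self.source_code_hash
        )
        body = {
            "agent_id": self.agent_id,
            "description": description,
            "timestamp": timestamp,
            "previous_hash": previous_hash,
        }
        entry = {**body, "entry_hash": digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every link; any edited or reordered entry breaks the chain."""
        previous_hash = self.source_code_hash
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["previous_hash"] != previous_hash:
                return False
            if digest(body) != entry["entry_hash"]:
                return False
            previous_hash = entry["entry_hash"]
        return True


# Hypothetical certificate for an illustrative agent.
certificate = AuthenticityCertificate(
    agent_id="agent-demo-001",
    source_code_hash=hashlib.sha256(b"initial source and parameters").hexdigest(),
    purpose="conservative investment advice",
)
certificate.record_change("certificate issued", "2025-03-01T09:00:00Z")
certificate.record_change("new learning capability enabled", "2025-04-12T14:30:00Z")
print(certificate.verify())
```

In a deployed system the timestamps and entries would come from a ledger rather than the caller, which is what would make the trail trustworthy to third parties.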

While these are just technological proposals, any viable system for AI validation would require global consensus among governments, regulators, and private entities. The challenge isn’t just technical—it’s about defining what “identity” means for non-human agents in a world where machines increasingly act autonomously.

The future: toward an Internet where humans and AI coexist with trust

Technology is advancing so quickly that predicting even the near future requires a significant leap of imagination. Yet one thing is clear: this debate is not just necessary. It is already underway, and the first steps are being taken.

The future envisioned by Isaac Asimov, where robots and humans coexist seamlessly, is no longer science fiction. But with this reality come complex ethical and moral implications. Now is the time to establish a solid foundation for the ethical development of technology.

The European Union has taken a pioneering role with the AI Act, the world’s first comprehensive law regulating the design and use of artificial intelligence.

Who hasn’t heard of Mika, the world’s first AI CEO?

What happens when a lawyer negotiates and signs an agreement with an AI assistant?

These questions only lead to more questions—ones that will require answers from all sectors, both governmental and private.

At Mobbeel, we’ll continue monitoring these developments closely, working to build a safer digital world. We’re AI experts using AI to combat AI-powered fraud—because the future of trust depends on staying one step ahead.

Contact our team if you want to implement robust identity verification mechanisms in your organisation.

I’m a Software Engineer with a passion for Marketing, Communication, and helping companies expand internationally—areas I’m currently focused on as CMO at Mobbeel. I’m a mix of many things, some good, some not so much… perfectly imperfect.
